Building a full-stack website generator with an agentic workflow (part 1)

For some reason, I wanted to understand how the frontend works. Too embarrassed to ask total noob questions, and too lazy to study the fundamentals, I threw all my questions at my patient ChatGPT teacher. To really “visualise” how state flows from page to page in the frontend, I thought I should build something simple. There was only one small problem, though: I can’t write any frontend language and I’ve never built anything full-stack before, so … how do I start?

I decided to ask an LLM at every step along the way, and the result became a full-stack website generator, version 1.

If you’re curious to see how the agent works (delightful most of the time, but frustratingly stubborn on occasion), watch the video below. If you want to understand how the agent was built, read on.

What you see in the video is an agentic workflow. An LLM codes each file in a logical sequence, working iteratively through all 46 files in the app repository (the repository structure itself was an LLM suggestion too). At the end of every mini-step, it pauses to ask for human feedback. You can revise the current mini-step’s output by giving a command, go back to revise the previous step, or simply ‘ok!’ your way along. If you are like me when I first started (unable to understand much of what’s going on), I’d suggest ‘ok!’ing all the way. Through this process you’ll get a rough idea of how the different parts of a full-stack app work together. Unfortunately, you’ll also have to deal with lots of debugging at the end, both trivial and critical. But you can just copy and paste the error messages into the debug Q&A agent; it should help you solve many of these problems, and it can be a great learning process.
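To make the loop concrete, here is a minimal Python sketch of the step-by-step flow with a human gate after every file. It is not the exact code behind the demo: `generate` (a wrapper around the LLM call) and `ask_human` (whatever collects your reply) are hypothetical placeholders, and the reply conventions (“ok”, “back”, or free-text revision instructions) are assumptions for illustration.

```python
from typing import Callable, Dict, List


def run_workflow(
    files: List[str],
    generate: Callable[[str, str], str],
    ask_human: Callable[[str, str], str],
) -> Dict[str, str]:
    """Generate files one at a time, pausing for human feedback after each one.

    Reply conventions assumed for this sketch:
      "ok" / "ok!"  -> accept the output and move on to the next file
      "back"        -> step back to the previous file; it is regenerated and shown again
      anything else -> treated as a revision instruction for the current file
    """
    outputs: Dict[str, str] = {}
    i = 0
    instruction = ""  # revision instruction for the current file, if any
    while i < len(files):
        name = files[i]
        outputs[name] = generate(name, instruction)
        reply = ask_human(name, outputs[name]).strip()
        if reply.lower() in ("ok", "ok!"):
            i += 1
            instruction = ""
        elif reply.lower() == "back":
            i = max(i - 1, 0)  # step back to the previous file
            instruction = ""
        else:
            instruction = reply  # regenerate the same file with this instruction
    return outputs
```

In a real run, `generate` would also need the files written so far as context; they are left out here to keep the control flow visible.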

This is what the agentic workflow looks like at a high level:

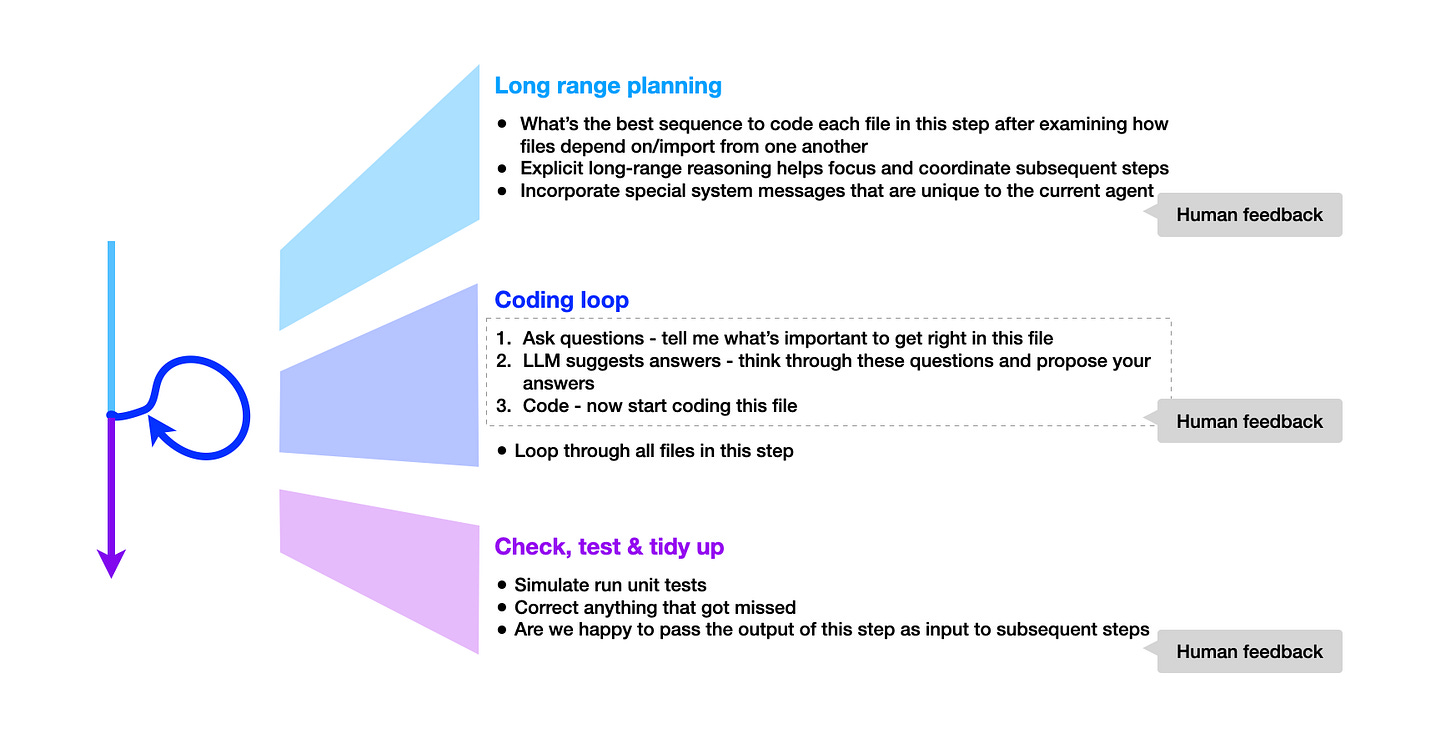

And going one level down:

I separated the repository into 4 parts because each part requires different long-range reasoning and its own specialised system message. These are things I picked up over the course of this project; I’m sure a good software engineer could write much better prompts telling each agent how to think long term about its task and which intricacies to focus on.
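One simple way to give each part its own system message is a lookup table that pairs the part with a role description before the task is sent, using the role/content message format that chat-completion APIs such as OpenAI’s accept. The part names and prompt wording below are hypothetical: the four actual parts and their prompts aren’t spelled out here.

```python
from typing import Dict, List

# Hypothetical part names and system messages, for illustration only.
SYSTEM_MESSAGES: Dict[str, str] = {
    "data": "You are a backend engineer designing data models. "
            "Keep names stable: every later file imports them.",
    "backend": "You are a backend engineer writing API routes. "
               "Only use data models that already exist.",
    "frontend": "You are a frontend engineer. Keep the state flow "
                "between pages consistent with the API.",
    "config": "You are a DevOps engineer writing configuration and setup files.",
}


def build_messages(part: str, task: str) -> List[Dict[str, str]]:
    """Pair the part-specific system message with the task in role/content format."""
    return [
        {"role": "system", "content": SYSTEM_MESSAGES[part]},  # KeyError for unknown parts
        {"role": "user", "content": task},
    ]
```

The resulting list can be passed as the `messages` argument of a chat-completion call; keeping the system messages in one table makes it easy to tune each part’s long-range guidance independently.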

This is what the final output looks like. I didn’t get the website working straight out of the coding flow. The Q&A agent at the last step was very helpful in debugging all the errors that came up while I was trying to spin up and run the app. And as you can literally see, it’s hard to teach AI good design :) … yet, at least.

The entire coding process (including me going through the code, giving the agent feedback, and sometimes wrestling with its stubbornness) took 1 hour; debugging took another 6 hours. The OpenAI charge? $0.39! It may not be news, but how efficient AI is still makes me marvel every time. If you are a programmer, this can probably help scale your productivity 100x. If you are a CEO, this probably means you can build a super lean team of subject matter experts, as long as they know how to develop and use their own agents, which keeps your costs super lean too. If you are the CEO of a legacy company competing with this kind of company, your product will be way too expensive. God bless you.

If you’re interested in seeing my code, I’ll be uploading it to my GitHub. There are more parts of this project I want to build, so please bear with me.

For now, there are a few lessons on building agentic workflows that I want to synthesise in writing and share with anyone who’s also interested in the field.

How to build a better agent

If you think of an AI agent as an individual contributor, how can you improve its quality of work? Setting aside non-technical considerations, I think there are 3 core dimensions.

Subject matter expertise

Better context setting

Hire someone smarter

Subject matter expertise is typically imparted via prompts. In most cases, telling the agent who he is and what he is good at should be enough. You can add RAG if you want the agent to have access to certain knowledge bases, or tools if you want it to perform specific actions (e.g. searching the internet). I think using fine-tuned models specialised in certain tasks could be interesting too (e.g. a coding model to code, or a UI/UX model to design the look and feel, if such a fine-tuned model exists).

It’s important to give the agent the correct context, and the correct amount of it. Again, you can do that via the system prompt. However, context evolves (as work gets done, there’s more context), and it may be different every time too (coding a dating app will be quite different from coding a flight booking app). Good context setting involves “knowing” as well as “thinking”. That’s why, at the beginning of each step, I like to ask the agent to reason and plan explicitly for the long term. That long-term plan then becomes part of the context passed down to every team-member agent in the subsequent steps.
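A minimal sketch of “plan first, then pass the plan down”, assuming a hypothetical `llm` callable that takes a prompt string and returns text (any model API could sit behind it). The prompt wording is illustrative; the point is that every step sees the same long-term plan plus a running record of what has been done, so the context grows as work gets done.

```python
from typing import Callable, Dict, List, Tuple


def plan_then_execute(
    goal: str, steps: List[str], llm: Callable[[str], str]
) -> Tuple[str, Dict[str, str]]:
    """Ask for an explicit long-term plan first, then include it in every step's prompt."""
    plan = llm(
        "Before writing any code, reason about the whole project and "
        f"write a long-term plan for: {goal}"
    )
    completed: List[str] = []  # grows as work gets done, so the context evolves
    outputs: Dict[str, str] = {}
    for step in steps:
        progress = "\n".join(completed) or "(nothing yet)"
        prompt = (
            f"Long-term plan:\n{plan}\n\n"
            f"Completed so far:\n{progress}\n\n"
            f"Your task: {step}"
        )
        outputs[step] = llm(prompt)
        completed.append(f"- {step}")
    return plan, outputs
```

Only step names are recorded as progress here; a fuller version might pass summaries or signatures of finished files so later agents know what they can import.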

Even if you can write perfect prompts and use the best memory design (in other words, you’re a wonderful boss who has taught your agent all your crafts and techniques and given him context on everything he should know), I believe your agent will still startle you by being frankly dumb and obstinate at times. At bottom, we humans don’t quite understand how LLMs think. You know it’s probabilistic, not deterministic, but how can the same model be stunningly smart one second and then keep predicting the wrong thing (a very simple thing, too) the next? Well, all I can suggest is that if you’ve already tried your best, perhaps it’s time to hire someone smarter instead: use a better model on the market. After all, that’s what investors have poured hundreds of billions of dollars into building, and you get to use it for much, much less. Take advantage of that.

How to build a better agentic workflow

Now if you think of a team of AI agents, how can you improve the team’s quality of work? The following design principles have helped me a lot.

Clear vision (for visual projects like this one)

Master plan

Good architectural design

Timely feedback

Fallback mechanism

By clear vision, I mean it literally. I find words an inefficient way to describe form. Try describing a curve. How about describing a shape made up of curves, like a button: how many words would you need? A verbal description of a picture is a highly abstracted concept, and translating it back into a picture is a process open to numerous interpretations. To get good form in the end, I think the best approach is to start with form itself (not words) as the prompt. But this point probably only applies to projects like this one, where there is a visual output.

For most workflows, it’s a good idea to have a master plan. Especially for complex tasks with many steps, planning decomposes a big goal into manageable sub-goals that can then be tackled in a logical sequence. Each sub-goal is easier to get right than the whole, and chaining these higher-accuracy nodes together results in a more robust system overall, with better final output.

In my first attempt at building the website generator, I used a linear approach. I didn’t group anything together: the generator would move from coding file 1 to file 2 … until it completed file 46. That’s what the master plan said to do, but it isn’t the best architectural choice. I realised that while some files depend on others sequentially (e.g. the database depends on the data structures), others work in parallel with their counterparts (e.g. frontend page and component pairs). Grouping things the right way is akin to finding the best path to a solution, and designing a good workflow architecture comes from domain experience and insight.
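One way to move beyond a strictly linear order is to write down which files depend on which and let a topological sort group them into batches. This sketch uses Python’s standard `graphlib.TopologicalSorter` (Python 3.9+); the file names and dependencies are hypothetical. Files in the same batch don’t depend on each other, so they can be handled in the same step, while sequential dependencies such as database-after-data-structures are preserved.

```python
from graphlib import TopologicalSorter
from typing import Dict, List, Set


def group_into_batches(deps: Dict[str, Set[str]]) -> List[List[str]]:
    """Group files into batches; each batch depends only on earlier batches."""
    sorter = TopologicalSorter(deps)  # maps each file to the files it depends on
    sorter.prepare()  # raises graphlib.CycleError on circular dependencies
    batches: List[List[str]] = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # every file whose dependencies are done
        batches.append(ready)
        sorter.done(*ready)
    return batches


# Hypothetical dependency map for a small app.
deps = {
    "models.py": set(),
    "database.py": {"models.py"},
    "api.py": {"database.py"},
    "HomePage.tsx": {"api.py"},
    "ProfilePage.tsx": {"api.py"},
}
print(group_into_batches(deps))
# [['models.py'], ['database.py'], ['api.py'], ['HomePage.tsx', 'ProfilePage.tsx']]
```

Tightly coupled pairs, such as a page and its component, could additionally be merged into a single node so they are always generated together in one prompt.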

A simple element of agentic workflows that I use a lot is human input. It can serve as a feedback mechanism or a fallback mechanism. As a feedback mechanism, it’s especially helpful after the planning steps: since the plan guides all subsequent agents, I want to make sure it is correct. I also use human input in the form of a chatbot at the end of the agentic flow to help me debug any errors that come up while spinning up the website. However, I think a much better approach would be to give the agent direct access to all error messages and console logs via some sort of integration. I haven’t figured that one out yet.
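As a possible starting point for that integration (not something built into this project), the sketch below runs a command with Python’s `subprocess.run`, captures its error output, and turns it into a prompt for a debugging agent. It assumes the command exits on its own, such as a build or test run (e.g. `npm run build`, used here only as a hypothetical example); a long-running dev server would need its logs streamed instead.

```python
import subprocess
import sys
from typing import List, Optional


def collect_errors(cmd: List[str], timeout: float = 300.0) -> Optional[str]:
    """Run a command that exits on its own; return its error output if it failed."""
    result = subprocess.run(cmd, capture_output=True, text=True, timeout=timeout)
    if result.returncode == 0:
        return None
    return result.stderr or result.stdout  # some tools print errors to stdout


def build_debug_prompt(cmd: List[str], error_text: str) -> str:
    """Turn captured error output into a prompt for a debugging agent."""
    return (
        f"Running `{' '.join(cmd)}` failed with this output:\n"
        f"{error_text}\n"
        "Explain the most likely cause and propose a fix."
    )


if __name__ == "__main__":
    # Stand-in for a real build step: a Python process that exits with an error.
    cmd = [sys.executable, "-c", "import sys; sys.exit('demo failure')"]
    errors = collect_errors(cmd)
    if errors:
        print(build_debug_prompt(cmd, errors))
```

The prompt would then go to the debug Q&A agent automatically, instead of copying and pasting error messages by hand.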

As a fallback mechanism, you can use human input to gate critical steps. Happy with everything? Let the workflow continue. Want a revision? Go back. In my demo video, the workflow has a simple fallback mechanism that only lets me go back a single step. That means if I find something wrong further down the chain, it’s almost impossible to rewind and fix it. I think I’ll include a more flexible fallback mechanism in the next iteration.
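A more flexible fallback could keep every accepted step as a checkpoint so the workflow can rewind to any earlier step, not just the previous one. This is a minimal sketch of that idea, not the mechanism used in the demo; the `Checkpoints` class and its method names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class Checkpoints:
    """Keeps every accepted step output so the workflow can rewind to any earlier step."""

    history: List[Tuple[str, str]] = field(default_factory=list)

    def accept(self, step: str, output: str) -> None:
        """Record an output the human approved."""
        self.history.append((step, output))

    def rewind_to(self, step: str) -> List[str]:
        """Drop `step` and everything after it; return the dropped steps to redo."""
        names = [name for name, _ in self.history]
        idx = names.index(step)  # raises ValueError if the step was never accepted
        dropped = names[idx:]
        del self.history[idx:]
        return dropped
```

Rewinding discards every later step as well, because those outputs were generated with the old version in their context; the workflow would then regenerate them in order from the rewound step.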

That’s all for this post. Thank you for reading all the way to the end. Let me know if you think of anything interesting~