Could AI design if it could see? Building a frontend with LLMs’ visual capabilities

Full-stack website generator series part 3

In part 1, we went through using AI to build a full-stack website end to end. My number one regret was the frontend. While it looked clean and functional, it was far from what I had requested in my prompt (I asked for something modern and cheerful, like the Figma website 😬).

This made me realise how inefficient words are at describing form. To get the look I wanted, perhaps I should have prompted the LLM with the picture I had in mind. This post summarises my experiments with, and lessons from, the current visual capabilities of LLMs. My conclusion? Good design is HARD! Interested in the details? Read on.

Can an LLM copy form?

This is probably the easiest design task. Let’s run a few experiments.

First, let’s ask an LLM to replicate an image of a single physical object. The result is not too bad.

Now let’s try something non-physical and more abstract, such as a composite shape like the search bar on a website. What do you think? It looks more functional than elegant, doesn’t it? Because of copyright restrictions, LLMs cannot replicate the exact design. Even so, in the generated image the spacing, curvature, shadow, weight and balance, the subtleties that together amount to beauty, are somewhat lacking.

Can an LLM reflect on form?

If the LLM can see its own output and the reference side by side, can it pick up these subtle differences and iterate towards a better version? Let’s send both images back.
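The post doesn’t show how the two images were sent back. As a minimal sketch, assuming the OpenAI Python SDK’s Chat Completions API (the model used for these experiments isn’t stated, so the `gpt-4o-mini` default below is an assumption), the reference and the attempt can be placed side by side in a single user message as base64 data URLs. The helper names and the prompt wording are hypothetical.

```python
import base64


def to_data_url(image_bytes: bytes, mime: str = "image/png") -> str:
    """Encode raw image bytes as a base64 data URL, a form vision chat APIs accept."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return f"data:{mime};base64,{encoded}"


def build_reflection_messages(reference_png: bytes, generated_png: bytes) -> list:
    """Put the reference and the model's own attempt side by side in one user turn."""
    prompt = (
        "The first image is a reference design. The second image is your attempt "
        "to replicate it. List the visual differences (spacing, curvature, shadow, "
        "weight, balance), then describe a revised design that closes them."
    )
    return [
        {
            "role": "user",
            "content": [
                {"type": "text", "text": prompt},
                {"type": "image_url", "image_url": {"url": to_data_url(reference_png)}},
                {"type": "image_url", "image_url": {"url": to_data_url(generated_png)}},
            ],
        }
    ]


def request_reflection(client, reference_png: bytes, generated_png: bytes,
                       model: str = "gpt-4o-mini") -> str:
    """Send both images to a Chat Completions-style client and return its text reply."""
    response = client.chat.completions.create(
        model=model,  # assumption: the post does not say which model reflected on the images
        messages=build_reflection_messages(reference_png, generated_png),
    )
    return response.choices[0].message.content
```

In practice `client` would be `openai.OpenAI()`, which reads `OPENAI_API_KEY` from the environment; passing it in keeps the sketch testable with a stub. Notice that what comes back is a string: the model’s reflection is text, not pixels.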

If you read the LLM’s reflections, you’ll see it did pick up some of the most prominent differences, including round buttons, icons next to labels, spacing and alignment. Yet none of those observations translated meaningfully into the revised design.

What could have caused this puzzling discrepancy? After reading more about how vision models work, I think I understand it now. Current vision language models are trained to take image (and text) inputs and generate text outputs. They are fundamentally still “language” models, so they suffer the same pain when translating words back into form. They think in token predictions, not in shape, size and proportion.

Will AI be able to create good design?

I can only speculate. I’m by no means an AI expert, but I’ve always been deeply fascinated by the nature of learning and intelligence. My guess: it’s possible, certainly for a model that copies and emulates, but a model that truly masters the spirit of design is unlikely. Here’s why.

Possible. If you were a vampire who could live forever, with immense wealth and an expansive memory, and you also worked non-stop (don’t ask me why you’d have to work non-stop if you were already immensely wealthy), do you think you could become a master of any craft you wanted? I’d say probably yes. And that’s similar to how models are trained today: with tremendous amounts of resources, data, compute, energy and money.

The question is, would anyone train a model for design? I think some company definitely would, because design is such a big market. Adobe alone generated $21bn in revenue and $5bn in profit this year. OpenAI reportedly spent $100mn to train GPT-4, only about 2% of Adobe’s net profit.

Then why did I say it’s unlikely we’ll see a model that truly masters the spirit of design? Because it’s probably very, very hard. To train a model, you need to tell it what the correct answer is and incentivise it to predict that answer. Training a model to copy and emulate is not that difficult because a correct answer exists (although there are ethical considerations). But in design, and in art generally, the solution space is infinite. There isn’t one definitive design that stands out as correct while all others are deemed incorrect. How would you construct the reward function in this case?
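To make the contrast concrete, here is a toy sketch (my illustration, not how image models are actually trained): when a reference exists, “wrong” can be scored as pixel-wise mean squared error against it, and the best possible score is zero. `copy_loss` and the tiny 2×3 grayscale “images” are hypothetical.

```python
def copy_loss(generated, reference):
    """Pixel-wise mean squared error between two equal-size grayscale images.

    Images are lists of rows of numbers. Because a copy task has one correct
    target, the loss is well defined and reaches 0 only on an exact match.
    """
    if len(generated) != len(reference) or any(
        len(g_row) != len(r_row) for g_row, r_row in zip(generated, reference)
    ):
        raise ValueError("images must have the same shape")
    squared_errors = [
        (g - r) ** 2
        for g_row, r_row in zip(generated, reference)
        for g, r in zip(g_row, r_row)
    ]
    return sum(squared_errors) / len(squared_errors)


reference = [[0, 1, 0],
             [0, 1, 0]]    # a centred vertical bar
alternative = [[1, 0, 0],
               [1, 0, 0]]  # the same bar, left-aligned: arguably just as valid a design

print(copy_loss(reference, reference))    # 0.0
print(copy_loss(alternative, reference))  # 0.6666666666666666
```

A left-aligned bar may be just as good a design as a centred one, yet this loss penalises it purely for differing from the single reference. For open-ended design there is no single reference to plug in, which is exactly the difficulty with defining the reward.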

Design is also context-dependent. Most airports are designed for efficiency (security upfront, direct pathways, utilitarian furniture), but Singapore’s Changi Airport is designed to feel warm and inviting, a destination in itself, because a small country with few natural attractions needs to create memorable experiences wherever it can. To train a model that truly understands design, all of this context, from high-level goals down to low-level details, would need to be part of the input. It’s very difficult to construct such a dataset at scale.

The concept of beauty has an evolutionary origin. In nature, beauty is often associated with symmetry, health, and fertility, which are indicators of reproductive fitness. It is rooted in the sensory and emotional experiences of living beings. To design well is to appeal to the emotions. Would AI have to be sentient before it could perceive beauty? Perhaps good design requires more than intelligence; it may require a soul.

How will AI transform design?

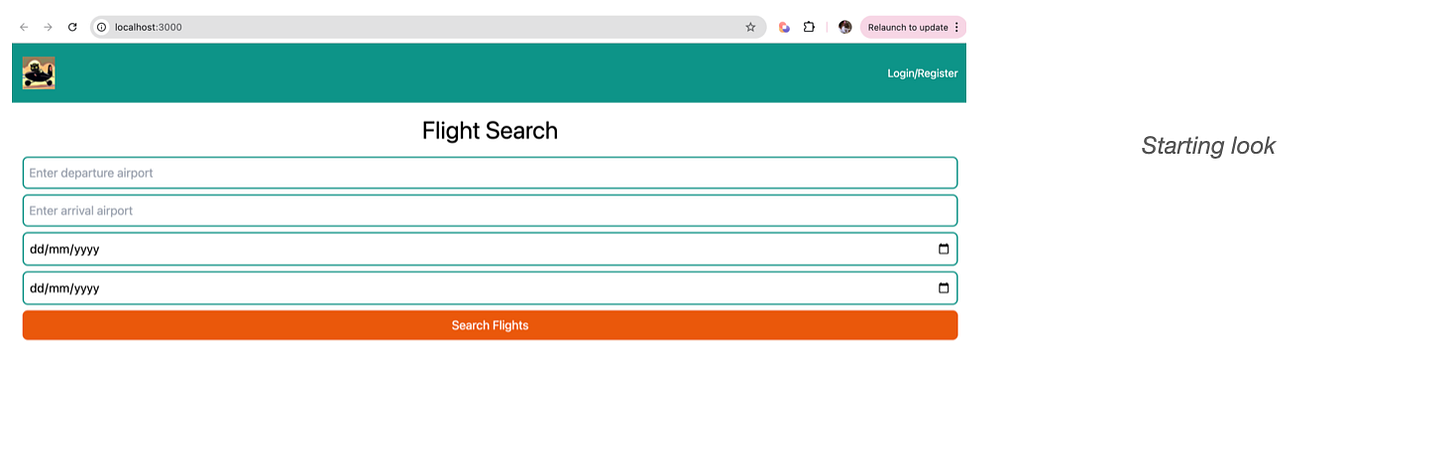

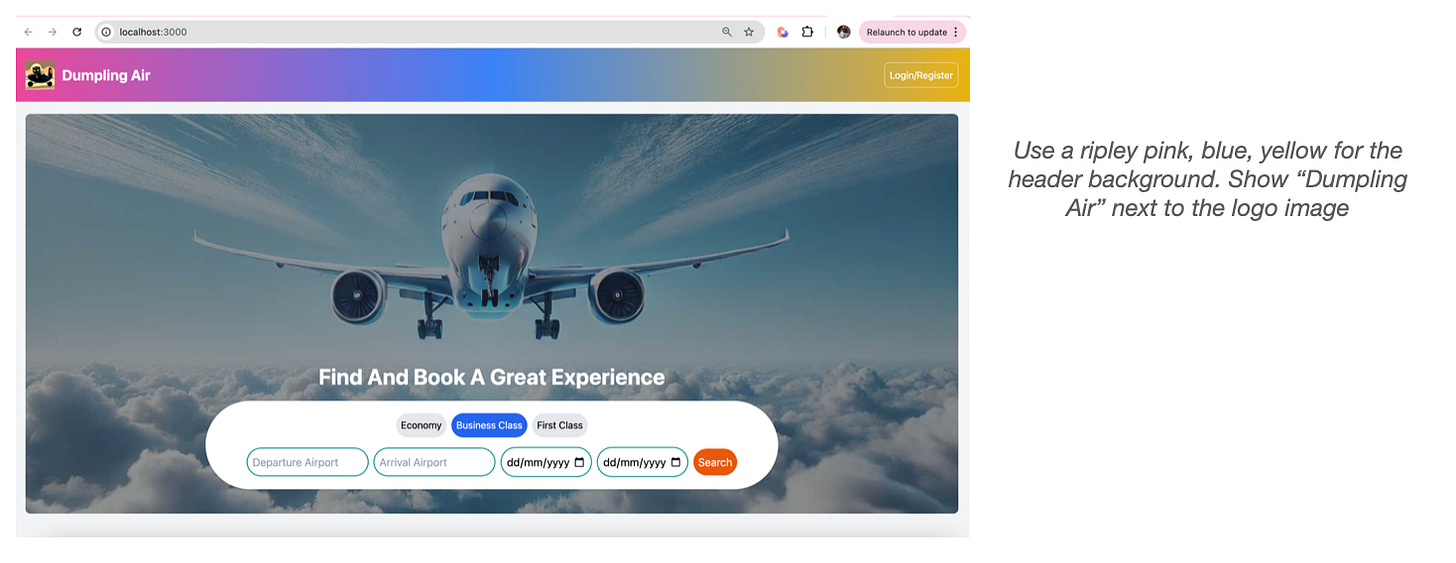

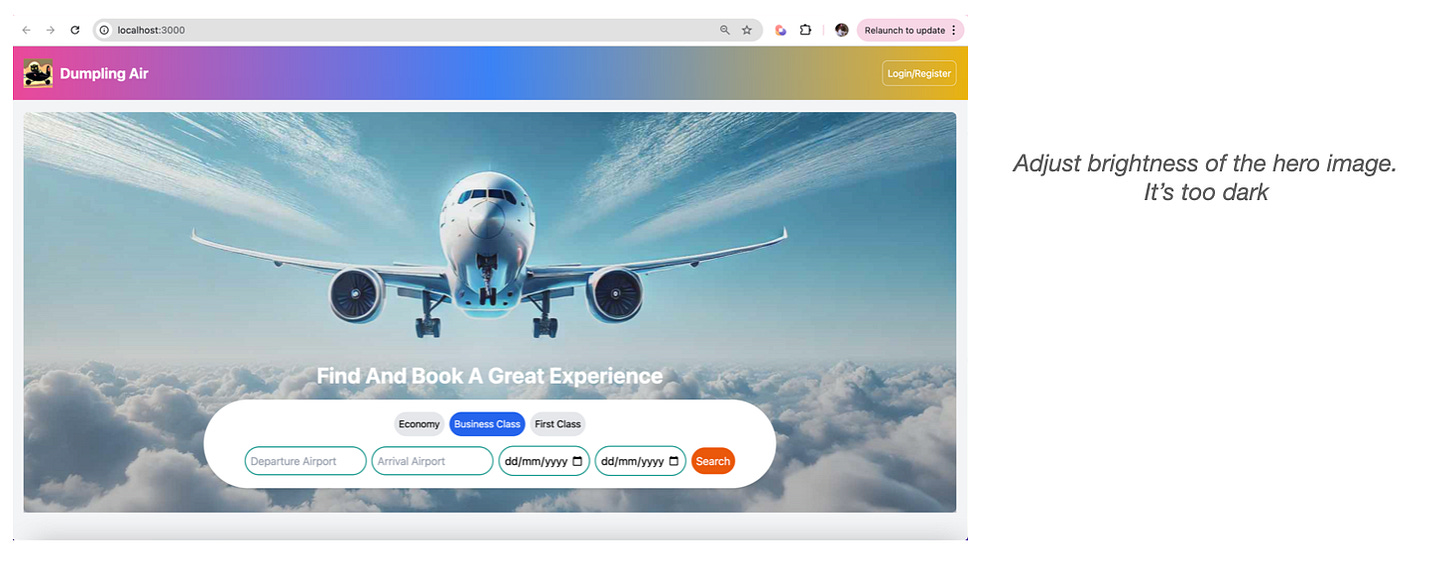

I’m of the view that AI won’t replace human but design in the age of AI will not be the same. It will be faster, easier and cheaper. It will super charge the master craftsman and enable even untrained individuals to manifest their ideas into tangible forms. To end this post and answer to the title building frontend with LLM's visual capabilities, I include below screenshots of how the frontend of my flight booking app evolved. I didn’t have to write a single line of code or sketch a single design, only fed GPT-4o mini image and text prompts as stated next to the screenshots.
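The post doesn’t show how the prompts were sent to GPT-4o mini. Here is a minimal sketch, assuming the OpenAI Python SDK’s Chat Completions API, of one way such an image-plus-text loop could work: each step sends a text instruction (optionally with a screenshot or sketch), the whole conversation is kept so later prompts refine the earlier page, and the HTML is pulled out of the reply. `FrontendSession`, `extract_html` and the system prompt are hypothetical, not the author’s setup.

```python
import base64
import re
from typing import Optional

# Matches the first fenced html block in a markdown reply:
# three backticks, "html", a newline, the body, then three closing backticks.
HTML_FENCE = re.compile(r"`{3}html\s*\n(.*?)`{3}", re.DOTALL)


def to_data_url(image_bytes: bytes, mime: str = "image/png") -> str:
    """Encode raw image bytes as a base64 data URL for an image_url content part."""
    return f"data:{mime};base64,{base64.b64encode(image_bytes).decode('ascii')}"


def extract_html(reply: str) -> str:
    """Return the body of the first fenced html block, or the whole reply if there is none."""
    match = HTML_FENCE.search(reply)
    return match.group(1).strip() if match else reply.strip()


class FrontendSession:
    """Keeps the whole conversation so each new prompt refines the previous page."""

    def __init__(self, client, model: str = "gpt-4o-mini"):
        self.client = client  # e.g. openai.OpenAI(); any object with chat.completions.create works
        self.model = model
        # Hypothetical system prompt asking for a single self-contained page.
        self.messages = [{
            "role": "system",
            "content": "Reply with one self-contained HTML file (inline CSS) in an html code block.",
        }]

    def step(self, instruction: str, image_bytes: Optional[bytes] = None) -> str:
        """Send one text instruction, optionally with an image, and return the extracted HTML."""
        content = [{"type": "text", "text": instruction}]
        if image_bytes is not None:
            content.append({"type": "image_url", "image_url": {"url": to_data_url(image_bytes)}})
        self.messages.append({"role": "user", "content": content})
        response = self.client.chat.completions.create(model=self.model, messages=self.messages)
        reply = response.choices[0].message.content
        # Store the raw reply so the model sees its previous page on the next turn.
        self.messages.append({"role": "assistant", "content": reply})
        return extract_html(reply)
```

For example, `session = FrontendSession(openai.OpenAI())`, then `session.step("Build a flight search page in this style", png_bytes)`, followed by text-only refinements such as `session.step("Make the search button rounder")`. Keeping the full history is the simplest design choice, but the prompt grows with every turn, so a long session might need trimming.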

That’s all for this post. Thank you for reading till the end~