Purrfect fun: generating AI portraits of my cat with DreamBooth and LoRA

The little black cat you see on the thumbnail images throughout this blog is my cat Dumpling.

While softwares like MidJourney has made producing AI images a breeze, off-the-shelf solutions can’t generate pictures for a specific entity. In simple words, although image models understand the concept of a “cat”, they don’t understand the concept of “dumpling the little black cat”. You can however, teach that specific concept to the model via a famous approach called DreamBooth. And it can be a very efficient process too using LoRA, as it fine-tunes only a small portion of the model weights. End result? A text-to-image model that inherits the world view of its mother model, but also understands whatever you wanted it to recognise. I had lots of fun training Dumpling’s DreamBooth, and the best part is it costs $0 all thanks to Modal’s free compute starter plan.

I’ll cover the training process briefly. We’ll look at how the image outputs evolve as we increase training steps and vary learning rate. A lot of what I do is tinkering (I’m not ML/computer engineer/data scientist by training), so I will document down what I think can be improved and experimented on in future iterations. That’s all for this post, then in the next blog I’ll do some ComfyUI stuff and try combining 2 LoRAs together, so that I can have myself and Dumpling in the same AI generated picture.

Part 1: How to set it up

The key ingredients for me are:

Modal for serverless computing, since I don’t own any GPUs.

Stable Diffusion XL model (SDXL) as the mother model. I also tried Flux.1 dev, but it somehow generated very gory images. Maybe I should increase the number of pictures in the training set, or Flux.1 dev isn’t great with animals. The models are downloaded directly from HuggingFace Diffusers’ Github.

Training pictures that capture the essence of Dumpling. I used only 6.

(Optional) Weights & Biases for tracking.

Here’s my full script. I adapted it from this Modal example. My edits are really trivial, mainly on the serving part of the code as Modal’s raw script didn’t work for me, also I added in parts that would allow me to run automated inferencing with different saved checkpoints later to find the best fine-tuned LoRA for Dumpling.

Part 2: Pick the best LoRA

When I first tried DreamBooth, I strictly followed the hyperparameters set in the Modal example (80 steps at 1e-4 learning rate). It worked really well, especially in capturing Dumpling’s demeanour and expression when rendered in anime styles. But it also gave Dumpling an extra tail or leg from time to time and Dumpling (cat) is often seen with bountiful of dumplings (food).

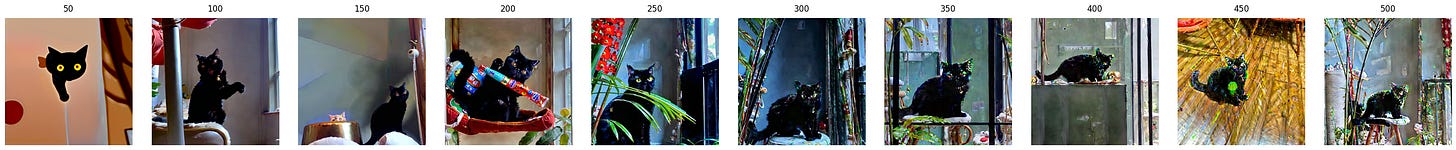

Curious on how a longer training cycle and different learning rates could change the outputs, I tested training the model at 4 different learning rates (1e-4 to 4e-4), each for 500 steps, and saved checkpoints after every 10 steps. Below are snapshots of output generated at every 50 training steps with the same simple prompt “dumpling the little black cat with golden eyes”:

LR = 1e-4. Images mostly in cartoon-style. Signs of over-fitting from step 400 onwards

LR = 2e-4. Images are more life-like from step 150. Signs of over-fitting from step 300 onwards. Badly over-fitted forms from step 400 onwards

LR = 3e-4. Images are more detailed, life-like, around step 100. Starting to overfit from step 200 onwards

LR = 4e-4. Feels very similar to outputs at 3e-4. Best images around step 100-150

LR 2e-4, 3e-4, 4e-4 all produced some pretty realistic depictions of Dumpling, so I’m going to shortlist the promising checkpoints and do more visual inspections. I picked the following checkpoints, and asked ChatGPT to generate me a list of text prompts to illustrate different drawing styles.

LR 4e-4 checkpoint 130

LR 3e-4 checkpoint 170

LR 2e-4 checkpoint 270

Below are the outputs at our shortlisted checkpoints. “0” - the first picture of each row is control at the original hyper parameters (LR 1e-4, 80 steps of training):

Although I was happy with the original model output, looking at this does convince me it’s worth the effort to experiment with different learning rates and save more checkpoints at multiple steps of the training process. All of the shortlisted checkpoints produce pretty good results. My favourite is 170 (LR 3e-4).

Part 3: Thoughts on improving it

Crop out the background from Dumpling’s training images. Or figure out how to caption each training image, e.g. “dumpling the little black cat sitting on a cat castle behind plants” for image_1, “dumpling the little black cat lying on the ground with a red ball”

Test out different learning rate schedulers. Right now it’s linear

Try Flux.1 again with 20 training pictures and >= 2000 training steps

That’s all for now. In the next post, I’ll combine 2 LoRAs together (dumpling and me) in ComfyUI. Stay tuned!