My first AI agent: analyse GitHub repos for genAI trends

It's been close to 2 years since OpenAI launched ChatGPT. Has the genAI hype died down? Where is it heading? I built a LangChain agent to:

Identify the top 100 repos by stars in the last year, and in the year prior

Search the internet to find what these repos do

Compare the two lists to see whether the share of genAI repos dropped over time, and what the latest trending genAI repos are about

First, I’ll talk about how I built the agent. Then, the results and insights. Last, learnings from building this agent and ideas for improvement. It’s my first AI project, so I welcome any thoughts and comments!

Part 1: The Cookbook

I used LangChain (LangGraph, to be specific) because it seems more versatile: with LangChain you can define precisely what you want to build, compared with other frameworks like AutoGen.

For the data source, I used Cybersyn, which hosts a free GitHub dataset on Snowflake Marketplace. Side note: Cybersyn’s founder Alex was head of Data Science at Coatue, which explains why I find their datasets so relevant for many of the things I wanted to do.

For the LLM, I simply used GPT-4o-mini for everything.

When you first connect an AI agent to a database, it has no idea what the database is about. Just like a human analyst, it needs to explore and understand the data and its structure. In this case, I set up the agent to acquire all table names, table descriptions, the column names and data types in each table, and the number of rows (as an approximation of how big each table is, which could affect table-join decisions later on). This information is then pieced together as “database_details”, which forms part of the system prompts for the LLMs later.
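A minimal sketch of this metadata step follows. It assumes the database exposes Snowflake-style INFORMATION_SCHEMA views and is reached through a DB-API cursor, such as the one from snowflake-connector-python. The queries, `TableInfo`, `fetch_table_info` and `build_database_details` are hypothetical names for illustration, not taken from the original agent.

```python
from dataclasses import dataclass, field

# Hypothetical metadata queries, assuming Snowflake-style INFORMATION_SCHEMA views.
# The %s placeholders follow the DB-API "pyformat" style; adjust for your driver.
TABLES_SQL = """
SELECT table_name, comment, row_count
FROM information_schema.tables
WHERE table_schema = %s
ORDER BY table_name
"""
COLUMNS_SQL = """
SELECT table_name, column_name, data_type
FROM information_schema.columns
WHERE table_schema = %s
ORDER BY table_name, ordinal_position
"""


@dataclass
class TableInfo:
    name: str
    description: str
    row_count: int
    columns: list = field(default_factory=list)  # (column name, data type) pairs


def fetch_table_info(cursor, schema: str) -> list:
    """Collect table names, descriptions, row counts and columns via a DB-API cursor."""
    cursor.execute(TABLES_SQL, (schema,))
    tables = {
        # row_count is NULL for views and comment may be NULL, so fall back to defaults
        name: TableInfo(name, comment or "", row_count or 0)
        for name, comment, row_count in cursor.fetchall()
    }
    cursor.execute(COLUMNS_SQL, (schema,))
    for table_name, column_name, data_type in cursor.fetchall():
        if table_name in tables:
            tables[table_name].columns.append((column_name, data_type))
    return list(tables.values())


def build_database_details(tables: list) -> str:
    """Render the metadata as plain text to embed in the LLM's system prompt."""
    lines = []
    for t in tables:
        lines.append(f"Table {t.name} (~{t.row_count:,} rows): {t.description}")
        lines.extend(f"  - {col}: {dtype}" for col, dtype in t.columns)
    return "\n".join(lines)
```

The resulting string can go straight into the system prompt. The row counts are there to give the model a hint about which tables are large before it decides on joins.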

If you are new to AI agents, you might ask what the difference is between an agent and an LLM (large language model). That’s a great question; we’ll come back to it after going through the cookbook. For now, let’s continue building.

Once the agent knows more about the database, it can translate your natural-language questions into SQL queries. This is magic, but at the level of Harry when he had just started at Hogwarts: simple questions work much better than complicated ones. For us, we are going to ask “find me the top 100 repos by total stars in the past 1 year”, and the model should summon magic through her wand.
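The translate-then-run step could look roughly like the sketch below. `llm` stands for any function that takes a prompt string and returns the model’s reply, such as a thin wrapper around GPT-4o-mini. The prompt wording, `extract_sql` and `answer_question` are assumptions for illustration. The read-only check is an extra safeguard that is not part of the setup described above.

```python
import re
from typing import Callable

# Hypothetical prompt; {database_details} is the metadata string built earlier.
PROMPT_TEMPLATE = """You are a data analyst. Here is the database:
{database_details}

Write one SQL SELECT query that answers the question below. Return only the SQL.

Question: {question}"""


def extract_sql(llm_output: str) -> str:
    """Strip an optional sql code fence and a trailing semicolon from the reply."""
    match = re.search(r"`{3}(?:sql)?\s*(.*?)`{3}", llm_output, re.DOTALL | re.IGNORECASE)
    sql = match.group(1) if match else llm_output
    return sql.strip().rstrip(";").strip()


def answer_question(question: str, database_details: str,
                    llm: Callable[[str], str], conn) -> list:
    """Brain: the LLM writes SQL. Action: run it on a DB-API connection."""
    prompt = PROMPT_TEMPLATE.format(database_details=database_details, question=question)
    sql = extract_sql(llm(prompt))
    # Rough guard: only run statements that start like a query.
    if not sql.lower().startswith(("select", "with")):
        raise ValueError(f"Refusing to run a non-SELECT statement: {sql!r}")
    cursor = conn.cursor()
    cursor.execute(sql)
    return cursor.fetchall()
```

Because `llm` is a plain callable, this step can be tested with a stub that returns fixed SQL before any real model is wired in.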

Cybersyn provides GitHub repo names, IDs and star counts; however, it doesn’t contain any descriptions of what the repos do. So I’m going to ask the agent to search the internet for each of the top 100 repos. This is done by giving the agent a search tool. I used Tavily, which offers 1,000 free searches a month, but you can also use others like DuckDuckGo, which is completely free.
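Here is a sketch of the per-repo search loop. `search` is a hypothetical callable that returns a list of text snippets for a query. With Tavily’s Python client, it might wrap `TavilyClient(api_key=...).search(query)` and read the `content` field of each entry in `results`. That response shape is an assumption, so check Tavily’s docs. The `max_searches` cap exists because of the free-tier quota.

```python
from typing import Callable, Dict, Iterable, List


def describe_repos(repo_names: Iterable[str],
                   search: Callable[[str], List[str]],
                   max_searches: int = 100) -> Dict[str, str]:
    """Search the web once per unique repo and keep the top snippet as its description.

    `max_searches` caps spending against a search quota (e.g. a monthly free tier).
    """
    descriptions: Dict[str, str] = {}
    searches = 0
    for name in repo_names:
        if name in descriptions:  # skip duplicates instead of paying for a second search
            continue
        if searches >= max_searches:
            break
        snippets = search(f"What does the GitHub repository {name} do?")
        searches += 1
        descriptions[name] = snippets[0] if snippets else "No description found"
    return descriptions
```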

Part 2: Where genAI is heading

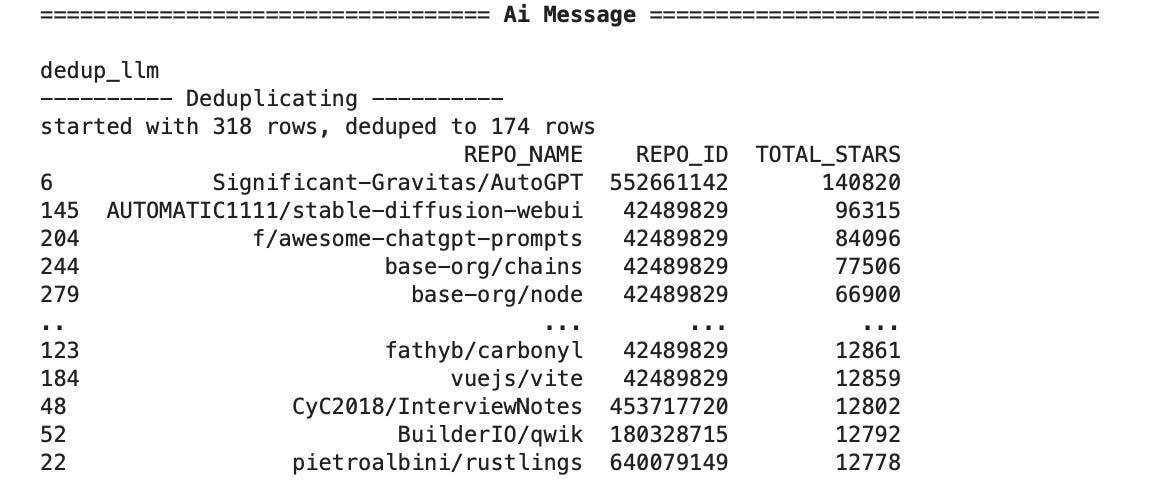

Now that we have all the information, we can ask the agent to analyse it for us. Well, in theory we can. The problem is that genAI models are probabilistic, not deterministic, in nature, so out of the box they are not that great at categorisation tasks like ours. But humans are flexible; we work around problems. With a small sacrifice in precision, I asked the agent to “sort the list and give me the top 50 repos on genAI”. In other words, I turned a yes/no classification into a ranking by likelihood. Here are snapshots of the list for the last year and the year prior. You can see that a lot more agent-related repos (especially for coding and software development) gained popularity in the past 12 months, whereas the year prior was mostly about chat and prompts. Progress is probably a lot slower than most people anticipated, but genAI is steadily heading towards applications.
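One way to build on the ranking idea while keeping the final step deterministic is to have the model attach a genAI likelihood score to each repo. pandas then does the sorting and computes the share asked about at the start. This is a hypothetical sketch. The column names (`repo`, `period`, `genai_score`) and the 0.5 threshold are assumptions, not the setup behind the lists above.

```python
import pandas as pd


def top_genai(df: pd.DataFrame, n: int = 50) -> pd.DataFrame:
    """Keep the n highest-scoring repos within each period.

    Assumes hypothetical columns: repo, period, genai_score (0 to 1, from the LLM).
    """
    return df.sort_values("genai_score", ascending=False).groupby("period").head(n)


def genai_share(df: pd.DataFrame, threshold: float = 0.5) -> pd.Series:
    """Fraction of repos per period whose score clears the (assumed) threshold."""
    return (df["genai_score"] >= threshold).groupby(df["period"]).mean()
```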

Sep23 - Aug24

Sep22 - Aug23

Part 3: Learnings and ideas for improvement

I started this project because I was curious about how good generative AI is today. I experimented with easier-to-use platforms like Coze (a chatbot development platform) late last year and was quite disappointed. That’s why I was really impressed when I started testing with LangChain! While the models still make mistakes, they hallucinate less, can use tools, and can carry out tasks with a certain degree of complexity. Far from AGI, but edging in the right direction.

My learnings:

Keep things simple

I started by wanting the agent to do too many things. Besides querying the database with natural language and searching the internet, I also wanted it to edit results, plot charts, and learn from past SQL mistakes. Instead of making the agent “powerful”, this made it “confused”. A workaround is to specify in exact words what you want the agent to do next, but that makes the agent feel dumber to interact with. Maybe a hierarchical agent architecture and better memory management could help.

Include reflections but do not rely on reflections

LLMs do not get every SQL query right. I wanted the model to get better at writing SQL by reflecting on its past mistakes. The result? When it worked, it worked: the model became smarter and the error got fixed. However, it doesn’t come up with the correct reflection every time! So reflection improves reliability but doesn’t guarantee it.
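Here is a simplified sketch of a bounded reflection loop. When a query fails, the error is recorded and passed back to the SQL writer on the next attempt. The loop gives up after a fixed number of tries, because reflection is not guaranteed to fix anything. `write_sql` and `execute` are hypothetical callables. In practice, `write_sql` would prompt the LLM with the question plus the recorded reflections. Passing raw error messages is a simplification of asking the model to write its own reflection.

```python
from typing import Callable, List


def run_with_reflection(question: str,
                        write_sql: Callable[[str, List[str]], str],
                        execute: Callable[[str], list],
                        max_attempts: int = 3) -> list:
    """Retry failed SQL, feeding earlier errors back to the writer as reflections.

    Reflection raises the odds of success but guarantees nothing, hence the hard cap.
    """
    reflections: List[str] = []
    for attempt in range(1, max_attempts + 1):
        sql = write_sql(question, reflections)
        try:
            return execute(sql)
        except Exception as err:  # e.g. a syntax error or unknown column from the database
            reflections.append(f"Attempt {attempt} failed.\nSQL: {sql}\nError: {err}")
    raise RuntimeError(f"Gave up after {max_attempts} attempts:\n" + "\n\n".join(reflections))
```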

Include human in the loop

Human input can be crucial in at least 2 ways. First, it helps the agent course-correct. Current technology still has a significant error rate, which makes the entire process (chain) brittle if it is left entirely to AI. Second, it supplies subject expertise that a generic LLM lacks. On that point, though, I think a fine-tuned model is the way forward, so I’m looking to test one in later experiments.
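Here is a hypothetical sketch of a human checkpoint placed before a risky step, such as running generated SQL. The `ask` parameter defaults to Python’s built-in `input`, so it can be swapped for a UI or a test stub. Within LangGraph itself, the built-in route would be its interrupt support, for example the `interrupt_before` option when compiling a graph with a checkpointer. This plain-Python version only illustrates the idea.

```python
from typing import Callable, Optional


def human_checkpoint(step_name: str, proposal: str,
                     ask: Callable[[str], str] = input) -> Optional[str]:
    """Pause so a person can approve, edit, or reject what the agent wants to do next.

    Returns the proposal (or the human's replacement), or None if rejected.
    """
    prompt = (f"[{step_name}] The agent proposes:\n{proposal}\n"
              "Press Enter to approve, type 'n' to reject, or type a replacement: ")
    reply = ask(prompt).strip()
    if not reply:
        return proposal          # approved as-is
    if reply.lower() == "n":
        return None              # rejected; the caller decides what happens next
    return reply                 # human supplied a corrected version
```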

Struggle with numbers

It’s hilarious how bad LLMs are at maths. Simple operations like sorting and counting can be a challenge if the input is long and you are not backing the model with a tighter, scripted method. Here, I used pandas to convert the LLM output into data frames to sort, filter for unique results, etc. I’m curious to test other models for their reasoning capability. OpenAI just dropped “Strawberry”, though it’s not available via the API yet. Or perhaps Alibaba’s Qwen2?
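The pandas step can be sketched as follows. A regular expression parses the model’s numbered list, and code rather than the model handles de-duplication and sorting. The line format in `LINE_PATTERN` (e.g. `1. owner/repo - 12,345 stars`) and the name `llm_list_to_frame` are assumptions for illustration.

```python
import re

import pandas as pd

# Matches lines like "1. owner/repo - 12,345 stars" (an assumed output format).
LINE_PATTERN = re.compile(r"^\s*\d+[.)]\s*(?P<repo>[\w.-]+/[\w.-]+)\s*[-:]\s*(?P<stars>[\d,]+)")


def llm_list_to_frame(llm_output: str) -> pd.DataFrame:
    """Parse a numbered list from the LLM, then let pandas dedupe and sort it."""
    rows = []
    for line in llm_output.splitlines():
        match = LINE_PATTERN.match(line)
        if match:  # ignore chatty lines such as "Here are the repos:"
            rows.append({"repo": match["repo"],
                         "stars": int(match["stars"].replace(",", ""))})
    df = pd.DataFrame(rows, columns=["repo", "stars"])
    return (df.drop_duplicates(subset="repo")   # keep the first mention of each repo
              .sort_values("stars", ascending=False)
              .reset_index(drop=True))
```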

Not that easy to scale up

You know how people say it’s easy to get a prototype up and running, but so difficult to productise a genAI application? 200% true! I could feel it even when scaling up from tiny to small, i.e. asking my agent for a top 10 repo list vs. a top 100. It started making mistakes in places I’d never thought of before. Editing a list of 10 repos? Piece of cake. Make the list 100? I got 105 back lol. I think it’s because these models are fundamentally probabilistic machines. If every item carries some chance of error, the chance of getting the whole list right shrinks exponentially as the list grows.
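Lists of 10 behaved and lists of 100 did not, so one possible mitigation is to feed the model small batches and check the counts in code. This is a hypothetical sketch, not a tested fix. `step` stands for any LLM call that transforms a batch of items. Here a count mismatch raises an error, though retrying the batch would be an equally reasonable choice.

```python
from typing import Callable, List, Sequence


def process_in_batches(items: Sequence[str],
                       step: Callable[[List[str]], List[str]],
                       batch_size: int = 10) -> List[str]:
    """Run an LLM step over small batches and verify the item count after each one.

    Small batches keep each prompt short; the count check catches dropped or invented rows.
    """
    results: List[str] = []
    for start in range(0, len(items), batch_size):
        batch = list(items[start:start + batch_size])
        output = step(batch)
        if len(output) != len(batch):
            raise ValueError(f"Batch at {start}: sent {len(batch)} items, got {len(output)} back")
        results.extend(output)
    return results
```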

LLMs are so cheap

I’m not kidding. Although I’m only playing around, I’ve been using OpenAI every day. Guess my bill? $1.96 for the past month. Thanks to all the VC money.
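To see how such small bills come about: API cost is just token counts multiplied by per-token prices. The default prices below are gpt-4o-mini’s list rates as understood at the time of writing: $0.15 per million input tokens and $0.60 per million output tokens. Treat them as assumptions and check OpenAI’s pricing page before relying on them.

```python
def estimate_cost_usd(input_tokens: int, output_tokens: int,
                      input_price_per_m: float = 0.15,
                      output_price_per_m: float = 0.60) -> float:
    """Estimate API spend in USD; default prices are assumed gpt-4o-mini rates per 1M tokens."""
    return (input_tokens * input_price_per_m + output_tokens * output_price_per_m) / 1_000_000
```

For example, 10 million input tokens plus 500,000 output tokens comes to about $1.80 at those rates.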

Now back to the question: what’s the difference between an agent and a model? This is actually something I had to constantly remind myself of while learning LangChain (coz that’s how the code needs to be structured). This is how I think about it:

A model is a brain without memory. You ask a question, it thinks and tells you the answer, but it doesn’t keep anything afterwards.

An agent is a person with skills. The person thinks with her brain, then performs actions. So you can give an agent a question in plain English and ask her to translate it into SQL (brain), then query the database to get you the answer (action).

An agentic workflow is a team. Each team member (agent) can carry out a specific task in the value chain, and all team members have access to the same context of the project. What we actually built in this project is an agentic workflow.
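The three ideas can be sketched in plain Python. The model is a stateless function, an agent pairs a model with a tool, and a workflow passes one shared state through several agents. The `Agent` class and `run_workflow` are hypothetical illustrations, not LangChain’s API. LangGraph expresses a similar idea with a `StateGraph` whose nodes read and update a shared state.

```python
from typing import Callable, Dict, List

State = Dict[str, object]

# A model: a stateless function from prompt to text. It remembers nothing between calls.
Model = Callable[[str], str]


class Agent:
    """A model plus one tool: it thinks (model), then acts (tool), and records the result."""

    def __init__(self, name: str, model: Model, tool: Callable[[str], object],
                 reads: str, writes: str):
        self.name, self.model, self.tool = name, model, tool
        self.reads, self.writes = reads, writes

    def __call__(self, state: State) -> State:
        plan = self.model(str(state[self.reads]))       # brain
        return {**state, self.writes: self.tool(plan)}  # action, added to shared state


def run_workflow(agents: List[Agent], state: State) -> State:
    """An agentic workflow: agents run in order and all see the same project state."""
    for agent in agents:
        state = agent(state)
    return state
```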

Okay, that’s all I had to share. Again, this is my first agent, so if you have any thoughts or ideas, I’d love to hear them!

Excited for what’s to come.